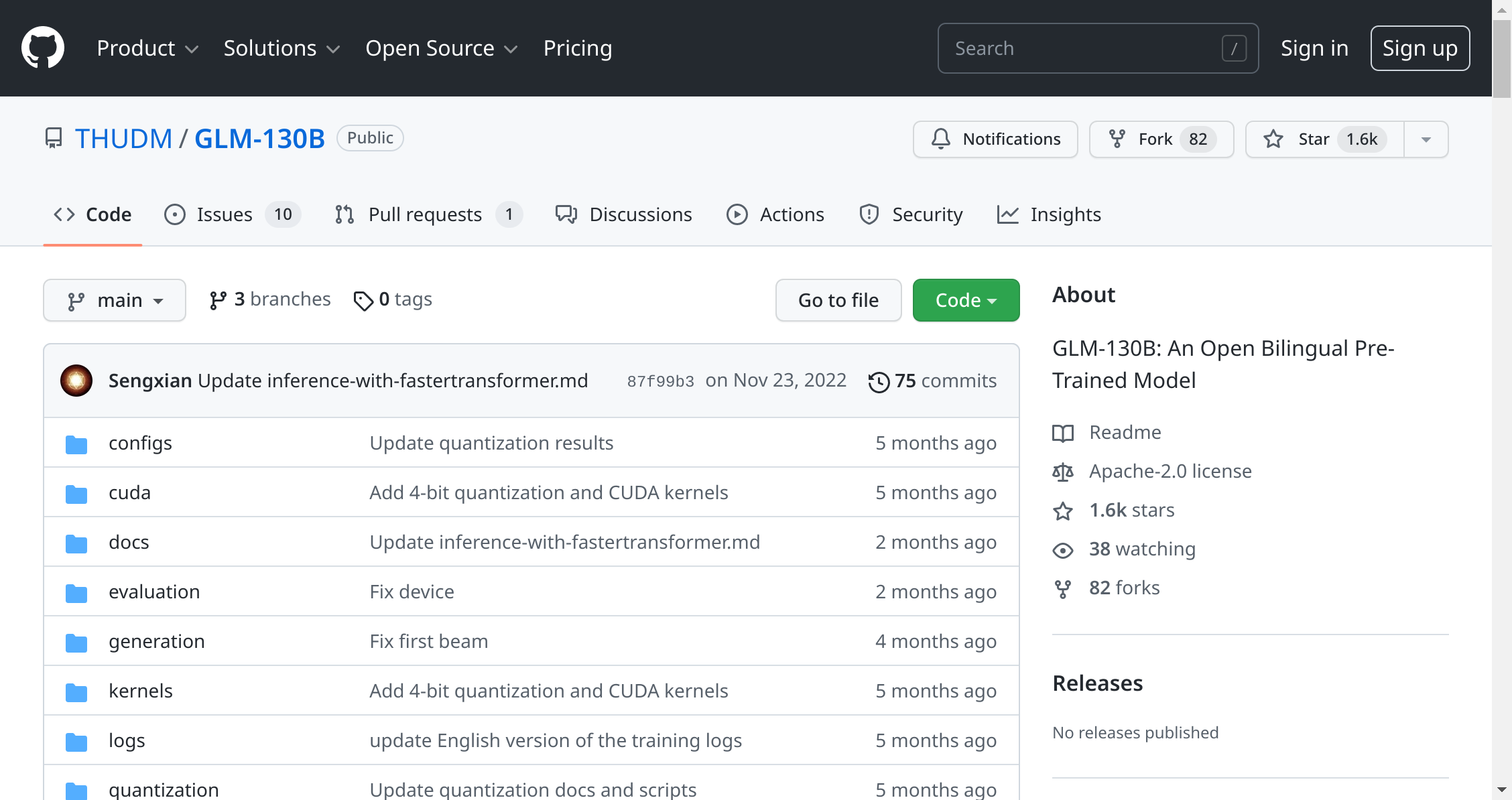

GLM-130B is an open bilingual (English and Chinese) bidirectional dense model with 130 billion parameters, pre-trained using the General Language Model (GLM) algorithm. It is designed to run inference with all 130B parameters on a single A100 (40G × 8) or V100 (32G × 8) server. With INT4 quantization, the hardware requirement drops further to a single server with 4 × RTX 3090 (24G), with almost no performance degradation. As of July 3rd, 2022, GLM-130B has been trained on over 400 billion text tokens (200B each for English and Chinese), a corpus split evenly between the two languages the model supports.
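A rough way to see why these server configurations are feasible is to compare the memory needed for the weights alone against the total GPU memory of each server. The sketch below is a back-of-the-envelope estimate, not part of the GLM-130B tooling: it assumes 2 bytes per parameter for FP16 and 0.5 bytes for INT4, treats each card's nominal "40G"/"32G"/"24G" as GiB, and ignores activations, the KV cache, and quantization scale factors, all of which need additional memory in practice. The helper names `weight_gib` and `server_gib` are hypothetical.

```python
# Back-of-the-envelope check: do GLM-130B's raw weights fit on each server?
# Assumptions (not from the GLM-130B docs): weights only (no activations,
# KV cache, or quantization scales), and each card's nominal memory size
# ("40G", "32G", "24G") is treated as GiB.

GIB = 2**30
NUM_PARAMS = 130e9  # 130 billion parameters

# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_gib(precision: str, num_params: float = NUM_PARAMS) -> float:
    """Memory (GiB) needed to hold the raw weights at `precision`."""
    return num_params * BYTES_PER_PARAM[precision] / GIB


def server_gib(num_gpus: int, gib_per_gpu: int) -> int:
    """Total GPU memory (GiB) across all cards in one server."""
    return num_gpus * gib_per_gpu


# (server description, weight precision, total GPU memory in GiB)
configs = [
    ("8 x A100 (40G)", "fp16", server_gib(8, 40)),
    ("8 x V100 (32G)", "fp16", server_gib(8, 32)),
    ("4 x RTX 3090 (24G)", "int4", server_gib(4, 24)),
]

if __name__ == "__main__":
    for name, precision, total in configs:
        need = weight_gib(precision)
        headroom = total - need
        print(f"{name}: {precision} weights ~{need:.0f} GiB of {total} GiB "
              f"(~{headroom:.0f} GiB left for everything else)")
```

Under these assumptions the FP16 weights take roughly 242 GiB, which leaves comfortable headroom on the A100 server but only a small margin on the V100 server, while INT4 shrinks the weights to about a quarter of that size, which is why four 24G cards can suffice.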