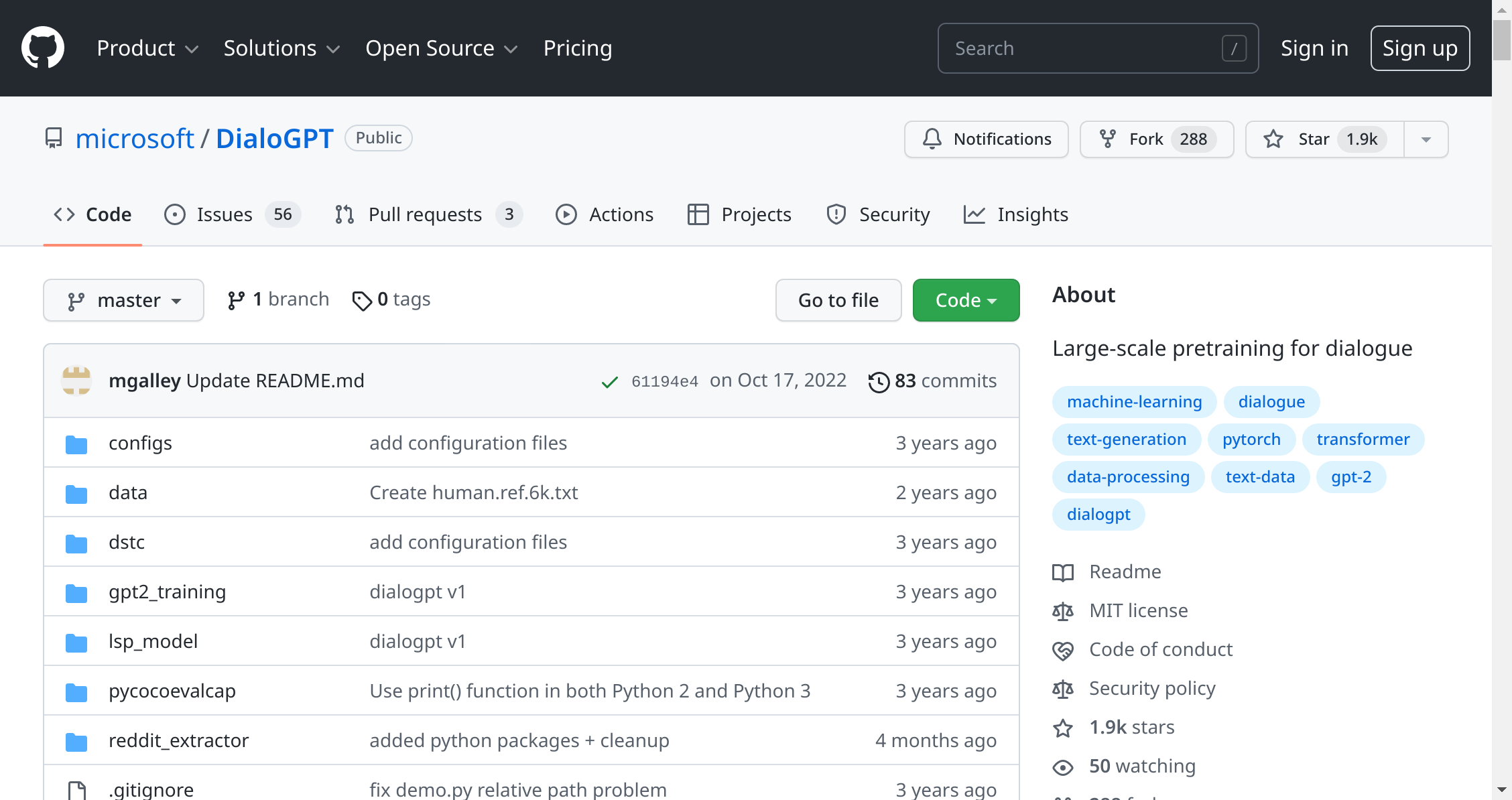

DialoGPT is a pretrained model for generating conversational responses, trained on 147 million multi-turn dialogues extracted from Reddit discussion threads. The implementation is based on Hugging Face's pytorch-transformers and OpenAI GPT-2, and the model architecture is a multi-layer transformer. The goal is responses that are relevant to the conversational context and consistent with common sense.

For example, when a user asks “Who is the first president of the United States?”, DialoGPT replies “George Washington”. Asked for the boiling point of water, it answers “I think it’s about 212 F”, and asked which is bigger, the sun or the moon, it answers “The sun”.

The training conversations span 2005 through 2017, so some topics fall outside the training data. DialoGPT still produces a fluent, on-topic reply in those cases, although not necessarily a correct one. Asked who won the World Cup in 2018, it responds “Germany, I believe”; asked whether Porsche could beat Tesla with its new Taycan EV, it responds “I don’t think so. The Tesla is more powerful than the porsche.” It will also respond to open-ended philosophical questions such as what constitutes a good life: “I think it’s that we’re all connected to our past lives and the meaning of life is to live how you want.”

DialoGPT is a joint research project between MSR AI and the Microsoft Dynamics 365 AI Research team. We are pleased with the results and recommend giving it a try!
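
As a rough illustration of how a multi-turn conversation might be passed to the model, here is a minimal sketch using the Hugging Face `transformers` library. Several things are assumptions rather than details from the text above: the checkpoint name `microsoft/DialoGPT-medium`, the convention of ending each dialogue turn with GPT-2's `<|endoftext|>` token, and the helper names `build_prompt` and `generate_reply`, which are hypothetical and exist only for this example.

```python
# Assumption: dialogue turns are concatenated, each ended by GPT-2's end-of-text token.
EOS = "<|endoftext|>"


def build_prompt(turns, eos=EOS):
    """Join dialogue turns into one string, ending every turn with the EOS token."""
    return "".join(turn.strip() + eos for turn in turns)


def generate_reply(turns, model_name="microsoft/DialoGPT-medium", max_new_tokens=40):
    """Generate one reply to the conversation so far.

    Requires `torch` and `transformers`; the checkpoint name is an assumption
    and the weights are downloaded on first use.
    """
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForCausalLM.from_pretrained(model_name)

    # Encode the whole conversation history as a single sequence.
    input_ids = tokenizer.encode(build_prompt(turns), return_tensors="pt")

    # GPT-2 has no pad token, so the EOS id is reused for padding.
    output_ids = model.generate(
        input_ids,
        max_new_tokens=max_new_tokens,
        pad_token_id=tokenizer.eos_token_id,
    )

    # Keep only the newly generated tokens, not the echoed history.
    reply_ids = output_ids[0, input_ids.shape[-1]:]
    return tokenizer.decode(reply_ids, skip_special_tokens=True)


# Example call (downloads the model, so it is left commented out):
# print(generate_reply(["Who is the first president of the United States?"]))
```

For a longer conversation, append each generated reply to `turns` before the next call so the model sees the full history. Replies depend on the checkpoint and decoding settings, so they may differ from the examples quoted above.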